2. RCM Virtual Machine

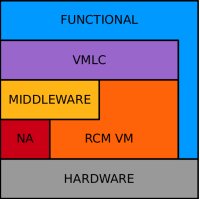

Figure 2.1: Overview of an ASSERT software system

The RCM Virtual Machine (VM) is an abstraction of the execution platform on which ASSERT applications run, and it is a concept central to the entire HRT-UML/RCM methodology.

Figure 2.1 illustrates the layered architecture of an ASSERT software system.

(Some explanatory words are in order on the intended meaning of the illustration in Figure 2.1. The attentive reader may notice that the functional component of the software system depicted in Figure 2.1 reaches down directly to the hardware. This representation simply reflects the fact that some elements of the hardware platform may be directly visible to functional models, for reasons that trade transparency and portability for speed of access. The general philosophy of ASSERT discourages, but does not prohibit, direct access of functional models to hardware. A further observation is in order on the Middleware box in Figure 2.1, which is shown as external to the RCM VM (as in fact it is) and below the VMLC layer. In actual fact, the Middleware part of the ASSERT software system is realized in terms of "primitive" VMLC components, which run like any other VMLC on top of the RCM VM and use the support of Network Access (NA) components to provide distribution-transparent communication to the application components included in the Functional component of the system. VMLC, or Virtual Machine Level Containers, are the sole run-time entities allowed to operate on the RCM Virtual Machine. As a result of a complex transformation process, VMLC …)

A system modeled with HRT-UML/RCM encompasses multiple layers. The whole stack of layers is described here to give the reader some insight into the run-time structures on which the methodology rests. The designer, however, need not be aware of all the layers and all the related run-time mechanisms to benefit from the methodology, since all those details are (intentionally) hidden from her/him at design time.

The bottom layer is the physical layer, composed of a network of interconnected computational nodes. On top of the physical layer lies the RCM Virtual Machine, which provides the run-time mechanisms required to implement the Ravenscar Computational Model (for instance, run-time enforcement and consistency checks, resource locking, synchronization and scheduling). The physical layer is accessed by the RCM Virtual Machine (insofar as local physical resources are concerned) and by the Network Access module, termed "NA" in the figure. The NA module implements the protocol stack that allows applications to communicate transparently across the network. Although the NA module provides the basic communication services, in HRT-UML/RCM the application software is not allowed to access the network directly: to achieve distribution transparency, communication across remote nodes is always mediated by a "Middleware" layer. The operation of the Middleware presently falls outside the scope of this tutorial. Suffice it to say for the moment that all of the internal components of that layer are designed and implemented in full compliance with the RCM, add no semantics to node-bound computation, and handle remote communication in a form that can be regarded as a "distributed RCM". The RCM Virtual Machine is inflexible (as opposed to permissive) in hosting, executing and actively policing the run-time behaviour of the entities that may legally exist and operate in it.
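The layer-access rules stated above, together with the tolerated (but discouraged) direct hardware access by functional models mentioned in the note on Figure 2.1, can be read as a small table of permitted dependencies. The following is a hypothetical Python sketch of such a table; the layer labels, the `Access` enumeration and `check_access` are illustrative names, not part of HRT-UML/RCM.

```python
# Hypothetical encoding of the layer-access rules of an ASSERT system.
# Layer labels are illustrative; they are not HRT-UML/RCM identifiers.
from enum import Enum


class Access(Enum):
    ALLOWED = "allowed"
    DISCOURAGED = "discouraged"
    FORBIDDEN = "forbidden"


# (caller layer, callee layer) pairs that the text permits.
ALLOWED = {
    ("VMLC", "RCM VM"),        # VMLC run on top of the RCM Virtual Machine
    ("VMLC", "Middleware"),    # remote communication is mediated by the Middleware
    ("Middleware", "RCM VM"),  # Middleware is itself built from "primitive" VMLC
    ("Middleware", "NA"),      # ...which rely on Network Access components
    ("RCM VM", "Physical"),    # local physical resources
    ("NA", "Physical"),        # the network itself
}

# Direct hardware access by functional code: discouraged, not prohibited.
DISCOURAGED = {("VMLC", "Physical")}


def check_access(caller: str, callee: str) -> Access:
    """Classify a caller -> callee dependency under the assumed rules."""
    if (caller, callee) in ALLOWED:
        return Access.ALLOWED
    if (caller, callee) in DISCOURAGED:
        return Access.DISCOURAGED
    # Anything else is rejected, e.g. application code calling NA directly.
    return Access.FORBIDDEN


if __name__ == "__main__":
    for pair in [("VMLC", "Middleware"), ("VMLC", "NA"), ("VMLC", "Physical")]:
        print(pair, check_access(*pair).value)
```

Treating every unlisted pair as forbidden mirrors the "inflexible rather than permissive" stance of the RCM Virtual Machine: legality is enumerated, not inferred.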

HRT-UML/RCM guarantees valuable software properties by controlling all distributed and concurrent behaviour through the RCM Virtual Machine and the Middleware. Application-level software is built in compliance with specific rules; in particular, all functional code and data must be encapsulated into VMLC. We shall see below that VMLC are automatically built from transformations of the PIM.

The RCM Virtual Machine concept entails the following features:

- it is a run-time environment that only accepts and supports "legal" entities; the sole legal entities that may run on it are VMLC, described in the Concurrency View section; no other run-time entity is permitted to exist, and thus no other can be assumed in the model;

- it provides run-time services that assist VMLC in actively preserving their stipulated properties; mechanisms and services of interest may, for instance, enable one to:

  - accurately measure the actual execution time consumed by individual threads of control over a given span of activity;

  - attach a monitored execution-time budget to a thread, replenish it, and raise an exception if a budget violation occurs;

  - segregate threads into distinct groups and attach a monitored execution-time budget to individual groups, to be handled in the same way as for threads;

  - enforce the minimum inter-arrival time stipulated for sporadic threads;

  - build fault containment regions around individual threads and groups thereof;

  - attain distribution and replication transparency in inter-thread communication;

- it is bound to a compilation system that only produces executable code for "legal" entities and rejects the non-conforming ones; run-time checks provided by the Virtual Machine shall cover the extent of enforcement that cannot be exhaustively achieved at compile and link time; the details of the checks to be performed to warrant preservation of the computational model constraints, whether statically or at run time, are given in [BDV03];

- the number of threads within the system is fixed at design time, and thus no thread may be created at run-time;

- dynamic allocation of memory is not permitted;

- it realizes a concurrent computational model provably amenable to static analysis; the model must permit threads to interact with one another (by some form of synchronization mediated by intermediary non-threaded entities) in ways that do not incur nondeterminism.
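The budget-monitoring services listed above (a replenishable execution-time budget per thread and per group of threads, with an exception on violation) can be illustrated with a minimal Python sketch. It assumes the Virtual Machine supplies the measured execution time of each slice of activity; `Budget`, `BudgetOverrun` and `account` are hypothetical names, and no actual timer is used.

```python
# Minimal sketch of thread and group execution-time budgets.
# Assumption: measured execution time is supplied by the run-time.
from dataclasses import dataclass, field


class BudgetOverrun(Exception):
    """Raised when a monitored execution-time budget is exceeded."""


@dataclass
class Budget:
    """Execution-time budget attachable to a thread or to a group of threads."""
    name: str
    capacity_us: int
    remaining_us: int = field(init=False)

    def __post_init__(self) -> None:
        self.remaining_us = self.capacity_us

    def replenish(self) -> None:
        # Restore the full budget, e.g. at each release of a cyclic thread.
        self.remaining_us = self.capacity_us

    def overrun(self) -> bool:
        return self.remaining_us < 0


def account(consumed_us: int, *budgets: Budget) -> None:
    """Charge measured execution time to every budget covering the running
    thread (its own and its group's), then report any violation."""
    for b in budgets:
        b.remaining_us -= consumed_us
    violated = [b.name for b in budgets if b.overrun()]
    if violated:
        raise BudgetOverrun(", ".join(violated))


if __name__ == "__main__":
    thread_budget = Budget("sensor_thread", capacity_us=500)
    group_budget = Budget("acquisition_group", capacity_us=800)
    account(400, thread_budget, group_budget)  # within both budgets
    try:
        account(200, thread_budget, group_budget)
    except BudgetOverrun as exc:
        print("budget violation:", exc)
```

Charging all budgets before checking keeps the group's accounting correct even when the thread's own budget is the one that overruns.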

Computational Model

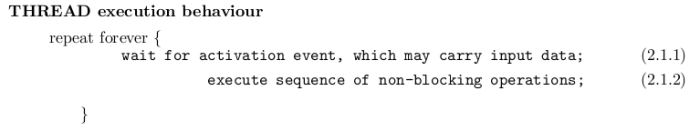

The computational model entailed by the Virtual Machine stems directly from the Ravenscar Profile [BDV03]. It assumes concurrent threads of execution, scheduled by a preemptive priority-based dispatching policy, which can intercommunicate (for data-oriented synchronization purposes) by means of monitor-like structures with access protocols based on priority ceiling emulation [GS88] and with support for both exclusion and avoidance synchronization*. The system is made of a finite, statically defined number of non-terminating THREADs, which infinitely repeat the following execution behaviour:

THREADs do not have internal (data) state of their own. Instead, they access data that may be embedded in the same container as the THREAD or else global to the system. At step 2.1.1 of execution a THREAD (logically) draws input from the system state, while in step 2.1.2 it may read from and/or write to any particular (data) state, whether local or global. Owing to the effect of priority ceiling emulation, any write access performed by a THREAD to a shared resource fully takes effect (i.e., commits) before any other THREAD may get to it. The execution stage at step 2.1.2 must only include non-blocking operations (i.e., operations that neither perform self-suspension nor involve a conditional wait on a resource protected by avoidance synchronization). As a direct consequence of this restriction, step 2.1.1 represents the single blocking invocation that may be made by THREADs.
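The two-step THREAD structure described above (step 2.1.1 as the only blocking call, step 2.1.2 as non-blocking functional code operating on shared state) can be sketched in Python. This is an illustration under stated assumptions: a `queue.Queue` stands in for the activation source, and a plain `threading.Lock` stands in for the ceiling-protected monitor, although Python provides no priority ceiling emulation. `SharedState`, `thread_body` and `max_jobs` are hypothetical names; `max_jobs` exists only so the demo terminates, whereas a real THREAD never does.

```python
# Sketch of the THREAD execution loop: one blocking wait, then a
# non-blocking job. The Lock is only a stand-in for a ceiling-protected
# monitor; Python does not implement priority ceiling emulation.
import queue
import threading
from typing import Callable, List


class SharedState:
    """Data shared between THREADs, held outside any THREAD."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.samples: List[int] = []

    def append(self, value: int) -> None:
        # Exclusion synchronization only: never waits on a logical condition.
        with self._lock:
            self.samples.append(value)


def thread_body(activations: "queue.Queue[int]",
                job: Callable[[int], None],
                max_jobs: int) -> None:
    """THREAD skeleton; max_jobs bounds the loop for demonstration only."""
    for _ in range(max_jobs):
        event = activations.get()  # step 2.1.1: the single blocking call
        job(event)                 # step 2.1.2: non-blocking functional code


if __name__ == "__main__":
    state = SharedState()
    events = queue.Queue()
    worker = threading.Thread(target=thread_body,
                              args=(events, lambda e: state.append(e * 10), 3))
    worker.start()
    for e in (1, 2, 3):
        events.put(e)  # some other system activity releases the THREAD
    worker.join()
    print(state.samples)  # [10, 20, 30]
```

Keeping all state in `SharedState` rather than in the THREAD itself follows the rule that THREADs have no internal data state of their own.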

Execution Timing

THREADs issue jobs (i.e., instances of execution) either at a fixed rate (in which case the issuing THREAD is called "Periodic" or "Cyclic") or sporadically, with a stipulated minimum time separation between subsequent activations (in which case the issuing THREAD is termed "Sporadic"). THREADs are therefore either Cyclic or Sporadic, in accordance with the nature of the source of their activation events. The source may be attached to the system clock for a periodic event or else to some other system activity for a sporadic event. Cyclic THREADs inherit a "Period" attribute from the rate attribute specified for the relevant source. Sporadic THREADs inherit a "Minimum Separation" attribute from the corresponding attribute specified for their designated source. During execution, the Virtual Machine polices that the timing behaviour of all THREADs conforms to their respective specification. Consequently, no THREAD may issue jobs more frequently than specified, and no Cyclic THREAD may fail to issue a job at the next period, barring a failure of the system clock.
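The two release patterns described above can be sketched in Python. The text only states that the Virtual Machine polices the Period and Minimum Separation; the enforcement policy shown here for Sporadic THREADs (deferring an early activation until the minimum separation has elapsed, rather than rejecting it) is an assumption, and `cyclic_releases` and `SporadicGate` are hypothetical names.

```python
# Sketch of Cyclic release instants and Sporadic minimum-separation
# enforcement. Assumed policy: early sporadic activations are deferred.
from dataclasses import dataclass
from typing import List, Optional


def cyclic_releases(start_ms: int, period_ms: int, count: int) -> List[int]:
    """Release instants of a Cyclic THREAD, computed from the start time
    rather than accumulated, so that no drift builds up."""
    return [start_ms + k * period_ms for k in range(count)]


@dataclass
class SporadicGate:
    """Enforces the Minimum Separation of a Sporadic THREAD."""
    min_separation_ms: int
    last_release_ms: Optional[int] = None

    def release(self, event_ms: int) -> int:
        """Return the instant at which a job for this event may start."""
        if self.last_release_ms is None:
            release_ms = event_ms
        else:
            earliest = self.last_release_ms + self.min_separation_ms
            release_ms = max(event_ms, earliest)
        self.last_release_ms = release_ms
        return release_ms


if __name__ == "__main__":
    print(cyclic_releases(0, 100, 4))  # [0, 100, 200, 300]
    gate = SporadicGate(min_separation_ms=50)
    print([gate.release(t) for t in (0, 20, 130, 150)])  # [0, 50, 130, 180]
```

With this policy, no two consecutive sporadic releases are ever closer than the Minimum Separation, which is the property the Virtual Machine must guarantee.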

A Deadline attribute may be attached to execution step 2.1.2 of a THREAD's specification. This attribute requires every single activation of that THREAD to complete within the specified time interval. A THREAD is fully characterized by the functional contents of execution step 2.1.2, by the nature and arrival rate of its activation event, and by the deadline that applies to the completion of each of its jobs.
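The characterization above (functional contents, nature and rate of the activation event, optional deadline) maps naturally onto a small record type. The following Python sketch is illustrative: `ThreadSpec`, `Kind` and the field names are hypothetical, and measuring the deadline from the job's release instant is an assumption, since the text does not say from which instant the interval is counted.

```python
# Sketch of a THREAD descriptor holding the attributes that characterize it,
# plus a deadline-miss check measured from the job's release (assumption).
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Kind(Enum):
    CYCLIC = "cyclic"
    SPORADIC = "sporadic"


@dataclass(frozen=True)
class ThreadSpec:
    """Attributes characterizing a THREAD (illustrative field names)."""
    name: str
    job: Callable[[], None]            # functional contents of step 2.1.2
    kind: Kind                         # nature of the activation event
    interval_ms: int                   # Period (Cyclic) or Minimum Separation (Sporadic)
    deadline_ms: Optional[int] = None  # optional Deadline attribute

    def missed_deadline(self, release_ms: int, completion_ms: int) -> bool:
        """True if a job released at release_ms completed too late."""
        if self.deadline_ms is None:
            return False  # no Deadline attribute attached
        return completion_ms - release_ms > self.deadline_ms


if __name__ == "__main__":
    ctrl = ThreadSpec("control_loop", lambda: None, Kind.CYCLIC,
                      interval_ms=100, deadline_ms=40)
    print(ctrl.missed_deadline(200, 235))  # False
    print(ctrl.missed_deadline(200, 245))  # True
```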

* Exclusion synchronization is the basic run-time mechanism that warrants mutual exclusion (in either read-lock or write-lock mode) in the face of concurrent access. Avoidance synchronization is the complementary run-time mechanism that withholds mutually exclusive access until specific logical conditions, which typically depend on the functional state of the shared resource, are satisfied.
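A monitor-like resource combining both mechanisms can be sketched in Python as a single-processor simulation with no real scheduler. On entry, the caller's active priority is checked against the resource's ceiling and then raised to it, as in priority ceiling emulation. A caller above the ceiling is rejected, analogous to the `Program_Error` that Ada raises on a ceiling violation. A boolean barrier provides avoidance synchronization. `ProtectedBuffer`, `Caller` and `CeilingViolation` are hypothetical names, and returning `None` where a real caller would be queued is a simplification of this sketch.

```python
# Simulated monitor with priority ceiling emulation (exclusion) and a
# boolean barrier (avoidance). No real threads or scheduler are involved.
from contextlib import contextmanager
from typing import Iterator, Optional


class CeilingViolation(Exception):
    """Caller's active priority exceeds the resource's ceiling."""


class Caller:
    """A THREAD as seen by a protected resource (illustrative)."""

    def __init__(self, name: str, priority: int) -> None:
        self.name = name
        self.active_priority = priority


class ProtectedBuffer:
    """One-slot monitor: exclusion via ceiling emulation, avoidance via a barrier."""

    def __init__(self, ceiling: int) -> None:
        self.ceiling = ceiling
        self._item: Optional[int] = None

    @contextmanager
    def _locked(self, caller: Caller) -> Iterator[None]:
        if caller.active_priority > self.ceiling:
            raise CeilingViolation(f"{caller.name} above ceiling {self.ceiling}")
        saved = caller.active_priority
        caller.active_priority = self.ceiling  # no other user can preempt now
        try:
            yield
        finally:
            caller.active_priority = saved  # restored on every exit path

    def put(self, caller: Caller, value: int) -> None:
        """Exclusion synchronization only: never waits on a condition."""
        with self._locked(caller):
            self._item = value

    def take(self, caller: Caller) -> Optional[int]:
        """Avoidance synchronization: proceeds only when the barrier is open.
        Returns None where a real caller would be queued instead."""
        with self._locked(caller):
            if self._item is None:  # barrier closed
                return None
            value, self._item = self._item, None
            return value


if __name__ == "__main__":
    buf = ProtectedBuffer(ceiling=10)
    consumer = Caller("consumer", priority=5)
    producer = Caller("producer", priority=8)
    print(buf.take(consumer))  # None: barrier closed
    buf.put(producer, 42)
    print(buf.take(consumer))  # 42
    rogue = Caller("rogue", priority=12)
    try:
        buf.put(rogue, 7)
    except CeilingViolation as exc:
        print("rejected:", exc)  # rejected: rogue above ceiling 10
```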